Geometric Deep Learning - From Euclid to Drug Design

- Christina Zhang

- Mar 6, 2021

- 4 min read

Introduction to Professor Michael Bronstein

Professor Michael Bronstein specialises in theoretical and computational geometric methods for machine learning and data science. His research interests span a broad spectrum of applications, ranging from computer vision and pattern recognition to geometry processing, computer graphics, and biomedicine. Professor Bronstein completed his PhD with distinction at the Technion (Israel Institute of Technology) in 2007. He is also the co-founder of Fabula AI, Videocities, and Novafora. In addition to his academic career, he currently serves as the Head of Graph Learning Research at Twitter and as a scientific advisor at Relation Therapeutics.

He joined the Department of Computing at Imperial College London in 2018 as a professor, holding the Chair in Machine Learning and Pattern Recognition. He has also served as a professor at USI Lugano, Switzerland, since 2010, and has held visiting positions at Stanford, Harvard, MIT, TUM and other prominent universities.

The beginning: Geometry

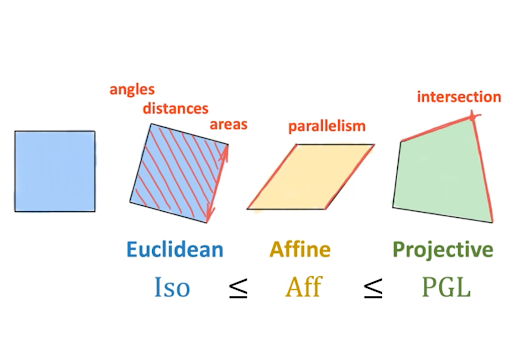

Until 1832, we were only aware of one type of geometry: Euclidean. Since then, a range of others has been discovered, including non-Euclidean geometries (in which the angles in a triangle don’t add up to 180 degrees) such as elliptic and hyperbolic geometry, as well as projective geometry and more.

Felix Klein proposed in his Erlangen Programme (1872) that we should approach geometry as the study of invariants, or symmetries, using the language of group theory. These ideas were instrumental in the development of modern physics, especially the Standard Model. For instance, Emmy Noether’s first theorem builds on this view, stating that every differentiable symmetry of the action of a physical system has a corresponding conservation law. One important example, which we are all familiar with today, is the conservation of energy, which corresponds to symmetry under shifts in time.

Much of machine learning has concentrated on models that use Euclidean geometry as their basis. Although many advances have been made in the past decades, the limitations of relying on Euclidean geometry alone have gradually become apparent.

Meanwhile, deep learning, a field of machine learning whose algorithms are loosely modelled on the structure and function of biological neural networks, started to develop. Multiple layers of processing are used to extract progressively higher-level features from the input data, and learning can be supervised, semi-supervised or unsupervised. Geometric deep learning is a subfield of machine learning that enables learning from complex data like graphs (networks) and multi-dimensional points. It aims to generalise neural network models to non-Euclidean domains such as graphs and manifolds, and it has revolutionised data science. We now have a range of architectures to analyse data sets, such as the convolutional neural network (CNN), also known as a shift-invariant or space-invariant artificial neural network.
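To make the "shift-invariant" label concrete, here is a minimal Python sketch (not taken from the talk) of a one-dimensional convolution with wrap-around boundaries. The signal and the filter weights are made up purely for illustration. Strictly speaking, the convolution itself is shift-equivariant: shifting the input shifts the output by the same amount. Invariance only appears once the outputs are pooled over all positions.

```python
def circular_conv(signal, kernel):
    """Circular 1D filter: out[i] = sum_k kernel[k] * signal[(i + k) % n]."""
    n = len(signal)
    return [
        sum(kernel[k] * signal[(i + k) % n] for k in range(len(kernel)))
        for i in range(n)
    ]


def shift(signal, steps):
    """Cyclically shift a signal to the right by `steps` positions."""
    n = len(signal)
    return [signal[(i - steps) % n] for i in range(n)]


signal = [1, 0, 2, 5, 3, 0, 0, 4]
kernel = [1, -2, 1]  # hypothetical filter weights, chosen only for illustration

# Equivariance: filtering a shifted signal equals shifting the filtered signal.
shift_then_filter = circular_conv(shift(signal, 3), kernel)
filter_then_shift = shift(circular_conv(signal, kernel), 3)
print(shift_then_filter == filter_then_shift)

# Invariance: pooling (here a plain sum) over all positions ignores the shift.
print(sum(shift_then_filter) == sum(circular_conv(signal, kernel)))
```

Both comparisons hold for any signal, kernel and shift, because the filter applies the same weights at every position.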

Artificial Neural Networks

The simplest neural network is the multilayer perceptron, which can approximate a continuous function to any desired accuracy. However, it runs into the curse of dimensionality: as the dimension of the input grows, the number of samples needed grows exponentially, which quickly makes learning impractical. Irregular data structures such as molecules or social networks also need architectures that are suited to them.
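A rough count shows how quickly the number of samples blows up. The sketch below assumes, purely for illustration, that we sample a regular grid with 10 points along each axis of the input space. The function name and the choice of 10 are ours, not from the talk.

```python
def grid_samples(points_per_axis, dimension):
    """Number of points in a regular grid with `points_per_axis` per axis."""
    return points_per_axis ** dimension


# The same resolution per axis costs exponentially more points as dimension grows.
for d in (1, 2, 3, 10, 100):
    print(d, grid_samples(10, d))
```

Three dimensions already need a thousand points, and a hundred dimensions need more points than could ever be collected, which is why extra structure such as symmetry is needed to make learning tractable.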

A graph, for instance a social network, is a classic non-Euclidean domain. We typically describe graphs using the graph theory that goes back to Euler, which reduces any complex structure to nodes connected by edges. Nodes have features, each of which could be a vector of some dimension, and the nodes are usually listed in an arbitrary order. A model that operates on a graph should therefore be invariant or equivariant to permutations of the nodes: relabelling the nodes should either leave the output unchanged or reorder it in exactly the same way.
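The following Python sketch illustrates what invariance and equivariance to node ordering mean in practice. It is a toy layer under our own assumptions, not a method from the talk: each node combines its own feature with the sum of its neighbours' features, and the weights, graph and permutation are hypothetical values chosen for illustration.

```python
def message_passing_layer(features, edges, w_self=3, w_neigh=2):
    """One sum-aggregation layer on an undirected graph.

    Each node mixes its own feature with the sum of its neighbours'
    features. The weights are hypothetical constants, not learned.
    """
    neighbour_sum = [0] * len(features)
    for u, v in edges:
        neighbour_sum[u] += features[v]
        neighbour_sum[v] += features[u]
    return [w_self * x + w_neigh * s for x, s in zip(features, neighbour_sum)]


def relabel(features, edges, perm):
    """Rename node i to perm[i]; the underlying graph is unchanged."""
    new_features = [0] * len(features)
    for old, new in enumerate(perm):
        new_features[new] = features[old]
    new_edges = [(perm[u], perm[v]) for u, v in edges]
    return new_features, new_edges


features = [1, 2, 3, 4]
edges = [(0, 1), (1, 2), (2, 3), (0, 2)]
perm = [2, 0, 3, 1]

out = message_passing_layer(features, edges)
p_features, p_edges = relabel(features, edges, perm)
p_out = message_passing_layer(p_features, p_edges)

# Equivariance: node outputs are reordered in the same way as the nodes.
print(all(p_out[perm[i]] == out[i] for i in range(len(out))))
# Invariance: a sum readout over all nodes ignores the ordering entirely.
print(sum(p_out) == sum(out))
```

Summation is used for aggregation because it does not depend on the order in which neighbours are visited. Any other order-independent operation, such as a mean or a maximum, would preserve the same property.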

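We can apply the Weisfeiler-Lehman test to check whether two graphs could be isomorphic, that is, whether there could exist an edge-preserving bijection between their nodes. The test gives a necessary but not a sufficient condition: if two graphs fail it, they are certainly not isomorphic, but some non-isomorphic graphs pass it as well.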
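As a minimal sketch of how the test works, the Python code below implements the one-dimensional variant (colour refinement). Every node starts with the same colour and repeatedly combines its own colour with the sorted colours of its neighbours. The function names and example graphs are our own, and the nested-tuple colours are kept for readability, whereas practical implementations compress them into short labels.

```python
from collections import Counter


def wl_colour_histogram(adjacency, rounds=3):
    """Colour refinement; `adjacency` maps each node to a list of neighbours.

    Returns a histogram of the final colours, which does not depend on
    how the nodes are named.
    """
    colours = {node: () for node in adjacency}  # identical starting colour
    for _ in range(rounds):
        colours = {
            node: (colours[node], tuple(sorted(colours[n] for n in adjacency[node])))
            for node in adjacency
        }
    return Counter(colours.values())


def wl_test(g1, g2, rounds=3):
    """False: certainly not isomorphic. True: possibly isomorphic."""
    return wl_colour_histogram(g1, rounds) == wl_colour_histogram(g2, rounds)


path = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
star = {0: [1, 2, 3], 1: [0], 2: [0], 3: [0]}
hexagon = {i: [(i - 1) % 6, (i + 1) % 6] for i in range(6)}
two_triangles = {0: [1, 2], 1: [0, 2], 2: [0, 1], 3: [4, 5], 4: [3, 5], 5: [3, 4]}

print(wl_test(path, star))              # False: the graphs are told apart
print(wl_test(hexagon, two_triangles))  # True, although they are not isomorphic
```

The second pair shows the limitation: every node in both graphs has exactly two neighbours, so all nodes keep identical colours and the test cannot separate a single six-node cycle from two disjoint triangles.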

Applications of geometric deep learning

Applications of geometric deep learning include predicting the binding sites of proteins, so that targeted drugs can be designed to complement a specific binding site. This makes it useful for producing more effective drugs that target cancerous sites, or vaccines that target the shape of foreign bodies, such as antigens on pathogens like bacteria or viruses.

Applying geometric deep learning to drug discovery and design matters because we want to make drug production cheaper and faster. Traditionally this has been very challenging, as each stage of producing a drug (designing, creating, testing and so on) can take a long time. In particular, only a handful of candidates can be tested in the lab, whereas virtual testing can run through a whole database in hours.

We can also use geometric deep learning to discover new antibiotic compounds, which matters given the constant rise of ‘superbugs’ that have evolved resistance to most known antibiotics. Without new compounds, it could become hard for patients to fight off infections in the future, as the pathogens would not respond to any of our current antibiotics.

Geometric deep learning can also be used in drug repositioning and combination therapy, as well as in predicting how a drug would interact with a target molecule, which gives a better idea of potential side effects. This could improve the quality of life for many patients who suffer from side effects of medications that, although life-saving, drastically reduce their physical and psychological well-being.

Conclusion

- Geometric principles underlie a large class of deep learning methods

- Inductive bias and architectures for non-Euclidean data

- Learnable local operators similar to convolution

- State-of-the-art results with several success stories

- Many fundamental open questions await answers, hence a very exciting field
